GNU/Linux openSUSE Installing Hadoop – QuickStart Guide

Hi! The Tutorial shows you Step-by-Step How to Install Apache Hadoop in openSUSE GNU/Linux Desktop.

Especially relevant: this guide shows an Apache Hadoop openSUSE Setup Vanilla in Pseudo-Distributed mode.

First, Hadoop is a distributed master-slave that consists of the Hadoop Distributed File System (HDFS) for storage and Map-Reduce for computational capabilities.

And Hadoop Distributed File System (HDFS) is a distributed file system that spreads data blocks across the storage defined for the Hadoop cluster.

Moreover, the foundation of Hadoop is the two core frameworks YARN and HDFS. These two frameworks deal with Processing and Storage.

The Guide Describe a System-Wide Setup with Root Privileges but you Can Easily Convert the Procedure to a Local One.

Finally, the Tutorial’s Contents and Details are Expressly Essentials to Give Focus to the Essentials Instructions and Commands.

-

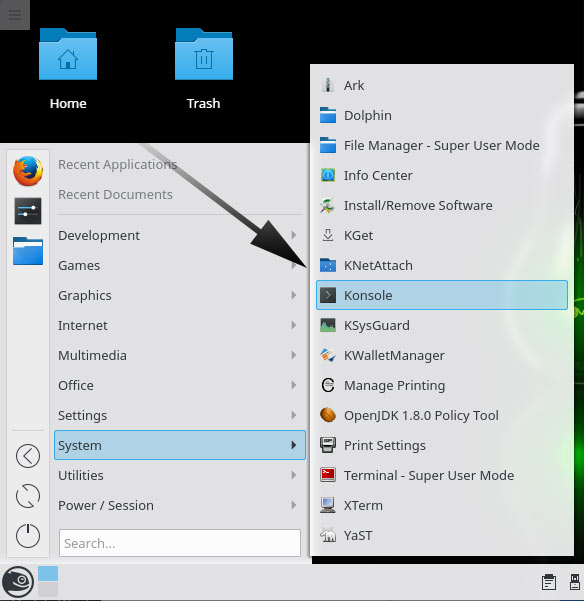

1. Launching Terminal

Open a Shell Terminal emulator window

(Press “Enter” to Execute Commands)

Contents