Installing CUDA 11 on Ubuntu 16.04 GNU/Linux – Step-by-Step Guide

How to install the NVIDIA CUDA 11.x Toolkit on Ubuntu 16.04 Xenial LTS 64-bit GNU/Linux desktops: a step-by-step tutorial.

CUDA is a parallel computing platform and programming model invented by NVIDIA.

It enables dramatic increases in computing performance by harnessing the power of the graphics processing unit (GPU).

With CUDA programming you can GPU-accelerate applications written in C, C++ and Fortran, using extensions to these languages in the form of a few basic keywords.

Here are some key features of CUDA:

- Parallel Computing Architecture: CUDA is built on a parallel computing architecture that allows developers to execute computational tasks in parallel on NVIDIA GPUs. This architecture consists of thousands of parallel processing cores that can handle multiple tasks simultaneously, enabling high-performance computing (HPC) and accelerating complex calculations.

- GPU Acceleration: CUDA enables developers to offload computationally intensive tasks from the CPU to the GPU, leveraging the massive parallel processing capabilities of modern NVIDIA GPUs. This can lead to significant speedups in applications such as scientific simulations, image processing, machine learning, and data analytics.

- CUDA Toolkit: The CUDA Toolkit is a comprehensive development environment provided by NVIDIA for building GPU-accelerated applications with CUDA. It includes compilers, libraries, debugging tools, and other utilities needed for CUDA development. The toolkit's own compiler, nvcc, targets C and C++; other languages such as Fortran and Python are supported through separate tools, for example the NVIDIA HPC SDK Fortran compiler and Python packages like Numba or PyCUDA.

- CUDA C/C++ Language Extensions: CUDA extends the C and C++ programming languages with GPU-specific features and syntax for writing parallel code. Developers can define custom kernels (functions executed on the GPU) using CUDA C/C++ syntax, and launch these kernels to execute parallel computations on the GPU.

- CUDA Libraries: NVIDIA provides a set of optimized libraries that leverage CUDA for specific computational tasks, such as linear algebra (cuBLAS), Fast Fourier Transform (cuFFT), sparse matrix operations (cuSPARSE), deep learning (cuDNN), and more. These libraries offer high-performance implementations of common algorithms for GPU acceleration.

- CUDA Runtime API: The CUDA Runtime API provides a set of functions for managing GPUs, allocating memory, launching kernels, synchronizing the host with the device, and other runtime operations. Developers can use the CUDA Runtime API to interact with CUDA-enabled GPUs and execute parallel code from their applications.

- Ecosystem and Community: CUDA has a vibrant ecosystem and community of developers, researchers, and enthusiasts who contribute to its development, share knowledge and resources, and collaborate on GPU-accelerated projects. NVIDIA actively supports the CUDA community through forums, documentation, tutorials, and developer programs.

- Applications: CUDA is widely used in various fields, including scientific computing, computational physics, computer graphics, artificial intelligence, machine learning, data science, finance, and more. Many popular software applications and frameworks leverage CUDA for GPU acceleration, including MATLAB, TensorFlow, PyTorch, and OpenCV.

Finally, alongside the Runtime API, CUDA also provides a lower-level Driver API with very similar functionality.
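To make the "few basic keywords" and the Runtime API calls described above more concrete, here is a minimal sketch of a vector addition in CUDA C++. It is only an illustration, not part of the installation procedure: the kernel name `vecAdd`, the helper `check`, the array size, and the block size of 256 threads are all assumptions chosen for the example.

```cuda
#include <cstdio>
#include <cstdlib>
#include <vector>
#include <cuda_runtime.h>

// __global__ marks a kernel: a function that runs on the GPU and is
// launched from host code. Each thread handles one array element.
__global__ void vecAdd(const float *a, const float *b, float *c, int n)
{
    int i = blockIdx.x * blockDim.x + threadIdx.x;  // global thread index
    if (i < n) {                                    // guard the last, partial block
        c[i] = a[i] + b[i];
    }
}

// Hypothetical helper: stop with a readable message if a runtime call fails.
static void check(cudaError_t err, const char *what)
{
    if (err != cudaSuccess) {
        std::fprintf(stderr, "%s failed: %s\n", what, cudaGetErrorString(err));
        std::exit(EXIT_FAILURE);
    }
}

int main()
{
    const int n = 1 << 20;                          // illustrative problem size
    const std::size_t bytes = n * sizeof(float);

    // Host arrays: every element of a is 1, every element of b is 2.
    std::vector<float> ha(n, 1.0f), hb(n, 2.0f), hc(n, 0.0f);

    // Allocate device memory with the Runtime API.
    float *da = nullptr, *db = nullptr, *dc = nullptr;
    check(cudaMalloc(&da, bytes), "cudaMalloc(da)");
    check(cudaMalloc(&db, bytes), "cudaMalloc(db)");
    check(cudaMalloc(&dc, bytes), "cudaMalloc(dc)");

    // Copy the inputs from host to device.
    check(cudaMemcpy(da, ha.data(), bytes, cudaMemcpyHostToDevice), "copy a");
    check(cudaMemcpy(db, hb.data(), bytes, cudaMemcpyHostToDevice), "copy b");

    // Launch enough 256-thread blocks to cover all n elements.
    const int threads = 256;
    const int blocks = (n + threads - 1) / threads;
    vecAdd<<<blocks, threads>>>(da, db, dc, n);
    check(cudaGetLastError(), "kernel launch");
    check(cudaDeviceSynchronize(), "cudaDeviceSynchronize");

    // Copy the result back and verify it on the host.
    check(cudaMemcpy(hc.data(), dc, bytes, cudaMemcpyDeviceToHost), "copy c");
    int mismatches = 0;
    for (int i = 0; i < n; ++i) {
        if (hc[i] != 3.0f) ++mismatches;
    }
    std::printf("mismatches: %d\n", mismatches);

    cudaFree(da);
    cudaFree(db);
    cudaFree(dc);
    return mismatches == 0 ? EXIT_SUCCESS : EXIT_FAILURE;
}
```

Once the toolkit is installed and `nvcc` is on the PATH, a file like this (saved under a name of your choosing, for example `vec_add.cu`) can typically be built with `nvcc vec_add.cu -o vec_add` and then run as `./vec_add` on a machine with a CUDA-capable GPU and a working driver.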


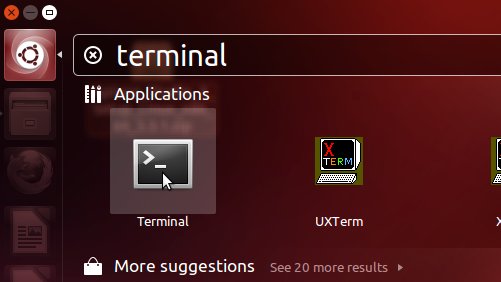

1. Launching Terminal

Open a terminal emulator window by pressing Ctrl+Alt+t on the desktop.

(Press “Enter” to execute commands.) If needed, first see the Terminal QuickStart Guide.
