How to Install Ollama on Linux Mint

-

2. Installing Ollama

Then, to set up Ollama on Linux Mint, simply run:

curl -fsSL https://ollama.com/install.sh | sudo sh

By default, the files are installed under the “/usr/local” directory.

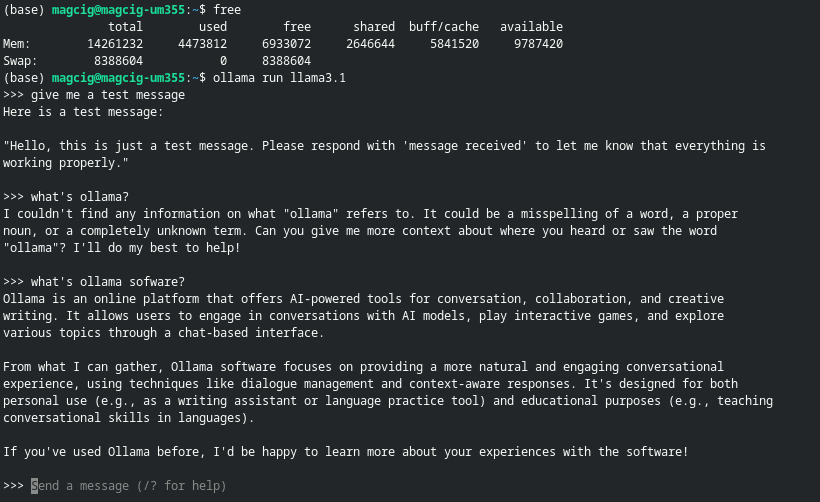
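To confirm that the install script actually put the ollama command on your PATH, a quick check like the one below can help. This is a minimal sketch: the function name check_ollama_installed is hypothetical, and it relies only on the shell's built-in command -v plus ollama --version.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reports whether the ollama CLI ended up on PATH.
# Returns 0 if it was found, 1 otherwise.
check_ollama_installed() {
  local bin
  if bin="$(command -v ollama)"; then
    echo "ollama found at: ${bin}"
    # Show the installed version; hide warnings and ignore a non-zero exit
    # (for example when the server is not running yet).
    ollama --version 2>/dev/null || true
    return 0
  fi
  echo "ollama not found on PATH"
  return 1
}

# Run the check without aborting the calling script if it fails.
check_ollama_installed || echo "Try re-running the install script."
```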

Finally, to run a chat with Llama 3.1:

ollama run llama3.1

The first time, you’ll need to wait while it pulls down the model…

After that, you can ask it questions at the prompt just as you would with ChatGPT :)
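Besides the interactive chat, ollama run also accepts the prompt as an argument, prints a single answer and exits, which is handy in scripts. The sketch below wraps that; has_model and ask_model are hypothetical helper names, and the model check assumes the usual ollama list layout (a header row, then the model NAME such as llama3.1:latest in the first column).

```shell
#!/usr/bin/env bash
# Hypothetical helpers (names are illustrative, not part of Ollama).

# has_model MODEL: succeed if MODEL is already downloaded.
# 'ollama list' prints a header row, then one model per line with its
# NAME (for example "llama3.1:latest") in the first column.
has_model() {
  ollama list 2>/dev/null | awk -v m="$1" '
    NR > 1 {
      base = $1; sub(/:.*/, "", base)   # strip the ":tag" suffix
      if ($1 == m || base == m) found = 1
    }
    END { exit !found }'
}

# ask_model MODEL PROMPT: pull MODEL if needed, then ask a single question.
# Passing the prompt as an argument makes 'ollama run' print one answer and
# exit instead of opening the interactive chat prompt.
ask_model() {
  local model="$1" prompt="$2"
  if ! command -v ollama >/dev/null 2>&1; then
    echo "ollama not found on PATH" >&2
    return 1
  fi
  has_model "$model" || ollama pull "$model" || return 1
  ollama run "$model" "$prompt"
}

# Example (needs the Ollama service running):
#   ask_model llama3.1 "Why is the sky blue?"
```

Pulling explicitly is optional, since ollama run downloads a missing model on its own; splitting the steps just keeps the slow first download separate from the question.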

-

3. Managing Ollama

Next, to manage the Ollama service:

To check its status:

systemctl status ollama

To start it automatically at boot:

sudo systemctl enable ollama

To start or stop it:

sudo systemctl start ollama

sudo systemctl stop ollama
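If you only want a yes/no answer rather than the full systemctl status output, systemctl's is-active and is-enabled subcommands report the same information through their exit codes. A minimal sketch, assuming the unit is named ollama as in the commands above; the function name ollama_service_summary is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: one-line summary of the ollama systemd unit,
# such as "active, enabled at boot". 'systemctl is-active' and
# 'is-enabled' with --quiet report only through their exit code.
ollama_service_summary() {
  local unit="${1:-ollama}" state boot
  if ! command -v systemctl >/dev/null 2>&1; then
    echo "systemctl not available"
    return 1
  fi
  if systemctl is-active --quiet "$unit" 2>/dev/null; then
    state="active"
  else
    state="not active"
  fi
  if systemctl is-enabled --quiet "$unit" 2>/dev/null; then
    boot="enabled at boot"
  else
    boot="not enabled at boot"
  fi
  echo "${state}, ${boot}"
}

ollama_service_summary || true
```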

By default, it listens on port 11434.
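To check that something is really answering on port 11434, you can send it a plain HTTP request with curl (already needed for the install step). This is a sketch under the assumption that the server is reachable at 127.0.0.1; the function name ollama_api_up is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if an Ollama server answers HTTP on the
# given host/port (default 127.0.0.1:11434, Ollama's default port).
ollama_api_up() {
  local host="${1:-127.0.0.1}" port="${2:-11434}"
  command -v curl >/dev/null 2>&1 || return 2
  # -f: fail on HTTP errors, -s: silent, --max-time: don't hang if nothing listens.
  curl -fs --max-time 2 "http://${host}:${port}/" >/dev/null
}

if ollama_api_up; then
  echo "Ollama API is reachable on port 11434"
else
  echo "Ollama API is not reachable"
fi

# Once the check passes, the same port serves Ollama's REST API, e.g.:
#   curl http://127.0.0.1:11434/api/generate \
#     -d '{"model": "llama3.1", "prompt": "Why is the sky blue?", "stream": false}'
```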

-

4. Ollama Getting Started Guide

Getting Started with Ollama on Linux Mint

I’m truly happy if this guide helped you get started with Ollama on Linux Mint!
