Install Hadoop for Kubuntu 13.04 Raring 32/64-bit

Hi! This tutorial shows you step by step how to install and get started with vanilla Apache Hadoop/MapReduce in pseudo-distributed mode on a Linux Kubuntu 13.04 Raring i386/amd64 desktop.

The guide describes a system-wide setup with root privileges, but you can easily convert the procedure to a local one.

The contents are deliberately kept short, to focus only on the essential instructions and commands.

-

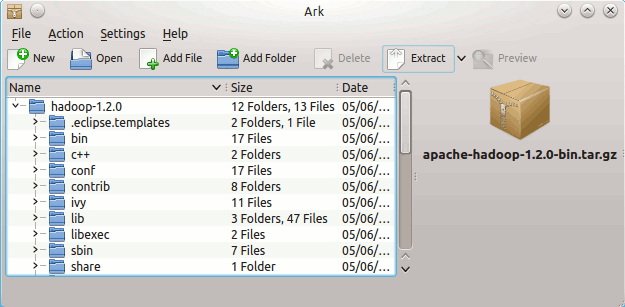

Download Latest Apache Hadoop Stable Release:

Apache Hadoop Binary tar.gz: Link to Download the Latest Apache Hadoop Stable tar.gz Archive Release

Right-Click on Archive > Open with Ark

Then Extract Into /tmp.
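If you prefer the terminal to Ark, the same extraction can be sketched with tar. This is only a minimal sketch: the helper name extract_to and the ~/Downloads location are assumptions for illustration, and <version> stands for whatever release you downloaded.

```shell
#!/bin/sh
# Sketch: extract a downloaded .tar.gz archive into a target directory.
# extract_to is a hypothetical helper name, not part of Hadoop.
extract_to() {
    archive=$1
    dest=$2
    [ -f "$archive" ] || { echo "no such archive: $archive" >&2; return 1; }
    mkdir -p "$dest" || return 1
    # -x extract, -z gunzip, -f archive file, -C switch to the destination first
    tar -xzf "$archive" -C "$dest"
}

# Example (assumed download location):
#   extract_to ~/Downloads/hadoop-<version>.tar.gz /tmp
```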

-

Open Terminal Window

(Press “Enter” to Execute Commands)

If Needed, First see: Terminal QuickStart Guide.

-

Relocate Apache Hadoop Directory

sudo su

If you Get “User is Not in Sudoers file” then see: How to Enable sudo

mv /tmp/hadoop* /usr/local/

ln -s /usr/local/hadoop* /usr/local/hadoop

mkdir /usr/local/hadoop/tmp

sudo chown -R root:root /usr/local/hadoop*
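The hadoop* glob in the ln step works as intended only when exactly one extracted Hadoop directory is present. The sketch below makes that assumption explicit. The helper name link_single is hypothetical, and it assumes the extracted directory is named hadoop-<version>.

```shell
#!/bin/sh
# Sketch: create PREFIX/hadoop -> PREFIX/hadoop-<version>, refusing to guess
# when zero or several versioned directories are present.
# link_single is a hypothetical helper name.
link_single() {
    prefix=$1
    count=0
    target=
    for d in "$prefix"/hadoop-*; do
        [ -d "$d" ] || continue
        count=$((count + 1))
        target=$d
    done
    if [ "$count" -ne 1 ]; then
        echo "expected exactly one hadoop-* directory in $prefix, found $count" >&2
        return 1
    fi
    # -s symbolic, -f replace an existing link, -n do not follow an existing link
    ln -sfn "$target" "$prefix/hadoop"
}

# Example (as root, after the mv step):
#   link_single /usr/local
```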

-

How to Install Oracle Official Java JDK on Kubuntu

-

Set JAVA_HOME in Hadoop Env File

nano /usr/local/hadoop/conf/hadoop-env.sh

Insert:

export JAVA_HOME=/usr/lib/jvm/<oracleJdkVersion>

Ctrl+x, then y and Enter, to Save & Exit :)
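If you are unsure which directory to use for JAVA_HOME, one possible approach is to derive it from the java binary found on your PATH. This is a sketch only: java_home_from is a hypothetical helper, and it assumes the usual JDK layout where the binary lives in <jdk>/bin/java or <jdk>/jre/bin/java.

```shell
#!/bin/sh
# Sketch: derive a JAVA_HOME candidate from the full path of a java binary.
# java_home_from is a hypothetical helper name.
java_home_from() {
    bin=$1
    case $bin in
        */jre/bin/java) echo "${bin%/jre/bin/java}" ;;
        */bin/java)     echo "${bin%/bin/java}" ;;
        *)              return 1 ;;
    esac
}

# Resolve the java found on PATH, following symlinks such as /usr/bin/java.
if command -v java >/dev/null 2>&1; then
    java_home_from "$(readlink -f "$(command -v java)")" \
        || echo "could not derive JAVA_HOME" >&2
fi
```

The printed directory is a candidate value for the export JAVA_HOME line; check that it really is the Oracle JDK you installed before using it.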

-

Configuration for Pseudo-Distributed mode

nano /usr/local/hadoop/conf/core-site.xml

The Content Should Look Like:

<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/usr/local/hadoop/tmp</value>
  </property>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://localhost:8020</value>
  </property>
</configuration>

Next:

nano /usr/local/hadoop/conf/hdfs-site.xml

The Content Should Look Like:

<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <property>
    <!-- specify this so that running 'hdfs namenode -format'
         formats the right dir -->
    <name>dfs.name.dir</name>
    <value>/usr/local/hadoop/cache/hadoop/dfs/name</value>
  </property>
</configuration>

Finally:

nano /usr/local/hadoop/conf/mapred-site.xml

The Content Should Look Like:

<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <name>mapred.job.tracker</name>
    <value>localhost:8021</value>
  </property>
</configuration>

-
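Before moving on, the values written to the three configuration files above can be read back from the terminal as a quick check for typos. The sketch below is a line-oriented lookup, not a real XML parser: get_property is a hypothetical helper, and it assumes each <name> and <value> element fits on one line, as in the listings above.

```shell
#!/bin/sh
# Sketch: print the <value> that follows a given <name> in a Hadoop
# *-site.xml file. get_property is a hypothetical helper name.
get_property() {
    file=$1
    name=$2
    awk -v want="$name" '
        /<name>/  { n = $0; sub(/.*<name>/, "", n); sub(/<\/name>.*/, "", n) }
        /<value>/ { v = $0; sub(/.*<value>/, "", v); sub(/<\/value>.*/, "", v)
                    if (n == want) { print v; found = 1; exit } }
        END { exit !found }
    ' "$file"
}

# Examples:
#   get_property /usr/local/hadoop/conf/core-site.xml fs.default.name
#   get_property /usr/local/hadoop/conf/hdfs-site.xml dfs.replication
#   get_property /usr/local/hadoop/conf/mapred-site.xml mapred.job.tracker
```

The function returns a non-zero status when the property is absent, so it can also be used in an if test.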

SetUp Path & Environment

su <myuser>

cd

nano .bashrc

Insert:

HADOOP_HOME=/usr/local/hadoop

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

JAVA_HOME is Set According to the Oracle Java JDK6+ Version Installed…

Then Load New Setup:

source $HOME/.bashrc
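To confirm that the new setup was actually loaded, you could check whether the Hadoop directories now appear on PATH. A minimal sketch follows. path_has is a hypothetical helper, and the /usr/local/hadoop fallback is only the location used in this guide.

```shell
#!/bin/sh
# Sketch: report whether a directory is listed in a PATH-style string.
# path_has is a hypothetical helper name.
path_has() {
    # Wrap both sides in colons so only whole entries match
    case ":$2:" in
        *":$1:"*) return 0 ;;
        *)        return 1 ;;
    esac
}

HADOOP_HOME=${HADOOP_HOME:-/usr/local/hadoop}
for dir in "$HADOOP_HOME/bin" "$HADOOP_HOME/sbin"; do
    if path_has "$dir" "$PATH"; then
        echo "on PATH: $dir"
    else
        echo "missing from PATH: $dir"
    fi
done
```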

-

SetUp Needed Local SSH Connection

sudo service ssh start

Generate SSH Keys to Access:

ssh-keygen -b 2048 -t rsa

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

Testing Connection:

ssh 127.0.0.1
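If the ssh test still asks for a password, the usual suspects are an authorized_keys file that lost earlier entries or has permissions that are too open. The following sketch adds a public key only when it is not already listed and then tightens the permissions. authorize_key is a hypothetical helper, not an OpenSSH tool.

```shell
#!/bin/sh
# Sketch: append a public key to an authorized_keys file only if it is not
# already listed, then restrict permissions on the file and its directory.
# authorize_key is a hypothetical helper name.
authorize_key() {
    pub=$1
    auth=$2
    [ -f "$pub" ] || { echo "no such key: $pub" >&2; return 1; }
    mkdir -p "$(dirname "$auth")" && chmod 700 "$(dirname "$auth")" || return 1
    touch "$auth" || return 1
    # -q quiet, -x whole-line match, -F fixed strings, -f read patterns from file
    grep -qxF -f "$pub" "$auth" || cat "$pub" >> "$auth"
    chmod 600 "$auth"
}

# Example:
#   authorize_key ~/.ssh/id_rsa.pub ~/.ssh/authorized_keys
```

Running it twice leaves a single copy of the key, so it is safe to repeat.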

-

Formatting HDFS

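hdfs namenode -format

(On Hadoop 1.x Releases, Which Use the conf/ Directory Shown Above, the Command is Instead: hadoop namenode -format)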
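Formatting erases any existing HDFS metadata, so it should be done only once. As a hedged sketch, you could first check whether the NameNode directory already looks formatted. is_formatted is a hypothetical helper, and it relies on a formatted name directory containing a current/VERSION file.

```shell
#!/bin/sh
# Sketch: decide whether a NameNode directory has already been formatted.
# A formatted directory contains current/VERSION.
# is_formatted is a hypothetical helper name.
is_formatted() {
    [ -f "$1/current/VERSION" ]
}

# dfs.name.dir as set in hdfs-site.xml in this guide
NAME_DIR=/usr/local/hadoop/cache/hadoop/dfs/name
if is_formatted "$NAME_DIR"; then
    echo "already formatted, skip the format step: $NAME_DIR"
else
    echo "not formatted yet: $NAME_DIR"
fi
```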

-

Starting Up Hadoop Database

start-all.sh
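Once the start script returns, the JDK's jps tool lists the running Java processes, which gives a quick way to see whether every daemon came up. The sketch below compares jps output against an expected list. missing_daemons is a hypothetical helper, and the daemon names assume a Hadoop 1.x style setup with a JobTracker, matching the mapred.job.tracker setting above.

```shell
#!/bin/sh
# Sketch: list expected daemons that are absent from `jps` output.
# missing_daemons is a hypothetical helper; the daemon list is an assumption
# based on the JobTracker-style configuration used in this guide.
missing_daemons() {
    jps_output=$1
    for daemon in NameNode DataNode SecondaryNameNode JobTracker TaskTracker; do
        # jps prints "<pid> <main class>" per line; compare the class exactly
        echo "$jps_output" \
            | awk -v d="$daemon" '$2 == d { ok = 1 } END { exit !ok }' \
            || echo "$daemon"
    done
}

if command -v jps >/dev/null 2>&1; then
    missing_daemons "$(jps)"
fi
```

No output means every expected daemon was found. Any name printed is a daemon worth looking up in the Hadoop logs directory.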

-

Apache Hadoop Database Quick Start Guide