Installing Apache Hadoop on Ubuntu 16.04 GNU/Linux – QuickStart Guide

Hi! This tutorial shows you, step by step, how to install and get started with vanilla Apache Hadoop/MapReduce in pseudo-distributed mode on Ubuntu 16.04 Xenial LTS GNU/Linux Desktop/Server.

Hadoop is a distributed master-slave framework that consists of the Hadoop Distributed File System (HDFS) for storage and MapReduce for computation.

The guide describes a system-wide setup with root privileges, but you can easily adapt the procedure to a local, per-user one.

Apache Hadoop on Ubuntu 16.04 Xenial requires an Oracle JDK 8+ installation on the system.
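
Before going further, it can help to confirm that a suitable JDK is already on the system. The following is a minimal sketch, not part of the original procedure: the helper name `java_major` is hypothetical, and the check assumes that `java -version` prints the version as its first quoted field, as Oracle JDK and OpenJDK builds commonly do. It only looks at the version number, not at the vendor.

```shell
# Extract the major Java version from a version string.
# Handles the legacy "1.8.0_181" scheme (major = 8) and the newer "11.0.2" scheme (major = 11).
# NOTE: java_major is a hypothetical helper introduced for this illustration.
java_major() {
  ver="$1"
  case "$ver" in
    1.*) ver="${ver#1.}" ;;   # drop the legacy "1." prefix
  esac
  echo "${ver%%.*}"           # keep everything before the first dot
}

if command -v java >/dev/null 2>&1; then
  # `java -version` writes to stderr; the version is assumed to be the first quoted field.
  raw=$(java -version 2>&1 | awk -F'"' '/version/ {print $2; exit}')
  major=$(java_major "$raw")
  if [ "$major" -ge 8 ] 2>/dev/null; then
    echo "Java $raw found (major version $major): OK"
  else
    echo "Java $raw found, but this guide expects JDK 8 or later"
  fi
else
  echo "No java command found on PATH; install a JDK before continuing"
fi
```

If the script reports that no `java` command is available, or that the version is older than 8, install the JDK first and then run the check again.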

Finally, this guide includes detailed instructions on getting started with Hadoop on Ubuntu.

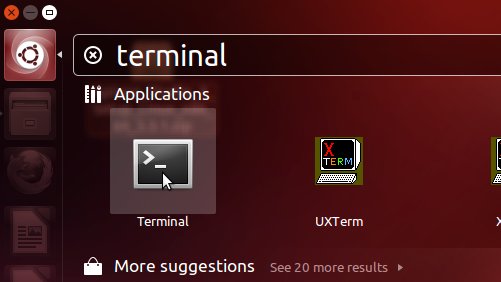

1. Accessing Shell

Open a terminal emulator window:

Ctrl+Alt+t on Desktop

Or log in to the server shell.

(Press “Enter” to execute commands.)
